Facial recognition, despite many protests and alarming warnings from privacy advocates, looks set to become mainstream. Late last month, a Wall Street Journal article documented the use of facial recognition at sports stadiums to facilitate contactless entry for visitors. Over the past few years, privacy-debilitating tools like Clearview AI have underlined the threat that misuse of facial recognition technologies can pose to ordinary people.

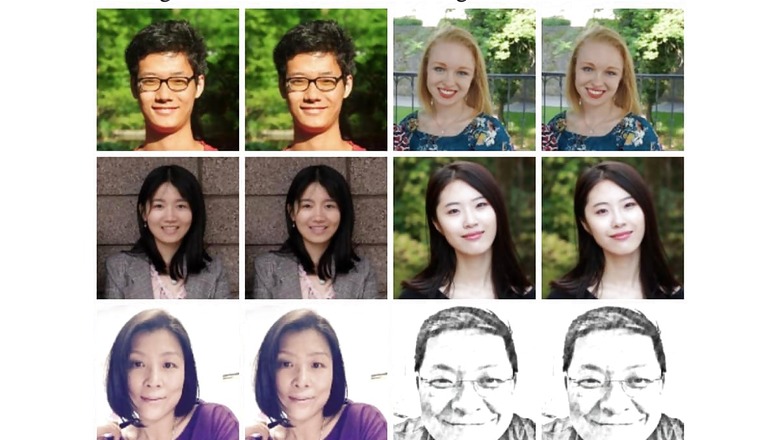

Taking on this very aspect, a team of researchers at the University of Chicago has designed a tool that makes fine alterations in pixels of a photograph to morph or ‘cloak’ a person’s face. The effect, the researchers have revealed, could successfully fool facial recognition systems marketed by the likes of Amazon and Microsoft.

Fighting against odds

The tool is named Fawkes, apparently in homage to the Guy Fawkes mask, a widely acknowledged symbol of resistance around the world. The research project has been discussed on Hacker News, Y Combinator's discussion forum, where the efficacy of the ‘cloaking’ mechanism was put to the test. In simpler terms, what the researchers have done is make minute changes to the pixels around a person’s face, drawing on other faces in a database. While the thread reveals that most such ‘cloaking’ techniques fail when images are compressed (which is most of the time in common internet browsing or social media usage), Fawkes reportedly holds up well even against heavy image compression.

According to the researchers, the systems they tested their technology against were facial recognition services offered by Amazon, Microsoft and Megvii, the last of these being popular in China, the poster nation for surveillance states around the world. What’s particularly important to note is that these ‘cloaks’ are not apparent to the naked eye, but represent fine structural changes that are sufficient to throw machine learning algorithms off track.
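To make the idea of changes too small to see more concrete, here is a minimal toy sketch in Python. It is not the researchers' method: the function names (`cloak`, `max_change`), the `epsilon` budget and the tiny 3x3 greyscale "images" are all assumptions made up for illustration, and the actual tool presumably relies on a far more sophisticated optimisation that is not reproduced here. The sketch only shows how every pixel can be nudged towards another face while the per-pixel change stays within a small cap.

```python
def cloak(image, target, epsilon=3):
    """Nudge each pixel of `image` toward `target` by at most `epsilon`.

    Hypothetical illustration only: images are lists of rows of
    8-bit greyscale intensities (0-255).
    """
    cloaked = []
    for row, target_row in zip(image, target):
        new_row = []
        for pixel, goal in zip(row, target_row):
            # Clamp the step to [-epsilon, +epsilon] so the change stays small.
            step = max(-epsilon, min(epsilon, goal - pixel))
            # Defensive clamp to the valid 8-bit range.
            new_row.append(max(0, min(255, pixel + step)))
        cloaked.append(new_row)
    return cloaked


def max_change(a, b):
    """Largest absolute per-pixel difference between two images."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


# Made-up pixel values standing in for a user's photo and another face.
original = [[120, 121, 119], [118, 200, 122], [117, 123, 125]]
other_face = [[90, 180, 119], [119, 40, 255], [117, 0, 126]]

cloaked = cloak(original, other_face, epsilon=3)
print(cloaked)
print(max_change(original, cloaked))  # never exceeds epsilon
```

With an `epsilon` of 3 on a 0-255 scale, no pixel moves by more than about one per cent of the full intensity range, which is the sense in which such a change would be hard to spot by eye. Whether a perturbation this crude would fool any real recognition system is a separate question that this sketch does not address.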

Interestingly, a New York Times report on Fawkes reveals that Shawn Shan, the creator of the technology, is currently an intern on a Facebook team that works on finding new ways to prevent identity theft and misuse. It is Shan’s experience that lends perspective on the limitations that a technology like Fawkes has today.

Too late for action?

The NYT report quotes Clearview AI founder Hoan Ton-That as saying that a system like Fawkes would not only fail against a gargantuan facial recognition database such as Clearview’s own, but would in fact make its recognition algorithms stronger. The real issue at hand is that too many of our photographs are already posted online, so machine learning algorithms have become adept at recognising the characteristic features that help organisations pick us out in a crowded public space.

Opinion in public forums holds that, applied on a small scale, a technology like Fawkes would not succeed. Applied en masse, however, it could prove useful in preventing photos you post on public platforms such as social media from being scraped by facial recognition algorithms. With most facial recognition databases, including the Advanced Facial Recognition Software (AFRS) used by Delhi Police in India, scraping faces from publicly available sources, such masking could prove a critical tool to stop blatant misuse of facial recognition technologies and bring back some semblance of privacy.

With multiple documented accounts of facial recognition technology being used by authorities across the world, a tool like Fawkes could help the biggest technology companies offer their users an additional degree of privacy cloaking. Shan and his team, on this note, are building an app for personal use too, to give users the ability to mask themselves from facial recognition services more efficiently.

The service is available for download for both Windows and Mac, and its entire documentation can be accessed here.
